Update: There were some bugs in the code here, which have been fixed. If you were using the older version of this script on your site, you should update it.

Also, a lot of people have written back to me about getting undefined symbols, so I've highlighted the parts of this post that are absolutely critical for its functioning.

Steve Souders, creator of YSlow and author of the book High Performance Web Sites, is back in action writing a book titled Even Faster Websites. In it he details what one can do once the 14 YSlow rules (laws?) have been implemented and you still want better performance.

6 days ago, Steve gave a talk at SXSW Interactive, the slides of which are available on Slideshare. In it, he suggests (slide 27) that we should load scripts without blocking. To me, this seemed like a very interesting idea, and based on his hints in the next few slides, I started experimenting.

Let me back up a little. When browsers download scripts, they block all other resources from downloading until the script has finished downloading. By all other resources, I mean even CSS files and images. There are several ways to reduce the impact of serial script download, and the High Performance Web Sites book makes a couple of good suggestions.

- Rule 1 - Make Fewer HTTP Requests

- Rule 5 - Put Stylesheets at the Top

- Rule 6 - Put Scripts at the Bottom

- Rule 8 - Make JavaScript and CSS External

- Rule 10 - Minify JavaScript

There are several others, mostly around caching strategies and reducing HTTP overhead, but these alone reduce the impact of serial script download significantly.

Now, if you haven't already taken care of these rules of optimization, my suggestion will be hard to implement, or will reap no benefits. You can stop reading now, and go back to your site to optimize your code.

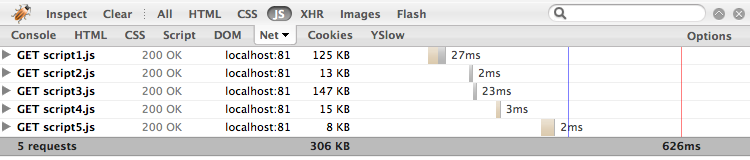

Regular <script> tags:

See that waterfall pattern of file downloads? Nothing else is downloaded while a script is being downloaded, not even other scripts. The time gap between successive downloads is the time taken to evaluate the script, roughly proportional to the size of the script. Notice also that this takes in excess of 600ms even when the files are being served from my local machine, effectively having near-zero network latency.

With script parallelization

Now, this looks much better, doesn't it? Not only has everything downloaded in parallel, it has taken less than half the time for the same size of files. In fact, you will notice that some noise while recording this data made the individual files take longer to download in this case, yet the total time was still less than half that of the previous case.

So, how did I do this? Read on...

One script tag to rule them all

Reading into Souders' suggested techniques (If you still have that slideshow open, go to slide 26), I decided that what I wanted was:

- I want the browser busy indicators to show,

- I want a script that doesn't care if I'm downloading from the same domain or not, and

- I want to preserve the evaluation order in case of interdependent scripts.

So, I came up with the following little script:

    <script type="text/javascript">
    (function() {
      var s = [
        "/javascripts/script1.js",
        "/javascripts/script2.js",
        "/javascripts/script3.js",
        "/javascripts/script4.js",
        "/javascripts/script5.js"
      ];
      var sc = "script", tp = "text/javascript", sa = "setAttribute", doc = document, ua = window.navigator.userAgent;
      for(var i=0, l=s.length; i<l; ++i) {
        if(ua.indexOf("Firefox")!==-1 || ua.indexOf("Opera")!==-1) {
          var t=doc.createElement(sc);
          t[sa]("src", s[i]);
          t[sa]("type", tp);
          doc.getElementsByTagName("head")[0].appendChild(t);
        } else {
          doc.writeln("<" + sc + " type=\"" + tp + "\" src=\"" + s[i] + "\"></" + sc + ">");
        }
      }
    })();
    </script>

It's a little ugly: There's a user-agent sniff happening in there and I've written it so as to use the least number of bytes possible (save for the indentation), but it works, and has no dependencies on any library. Just modify the array on top to place your list of scripts you wish to include, and let the rest of the script do the dirty work. If you need to include scripts from another domain, just use an absolute path.

This code was written keeping in mind that this should be the only piece of on-page inline JavaScript. This can then go on and fetch the script required in parallel, and those in turn can unobtrusively do their thing.

This works as advertised in IE and FF. I had not originally tested the parallelization in other browsers, where at the least it fails gracefully and preserves script execution order. It has since been tested in Safari, Chrome and Opera as well, and works as advertised everywhere.

Find this interesting? Follow me on twitter at @rakesh314 for more.

22 comments:

Hi Rakesh,

nice script, well done!

I tested it on IE7 and FF3.0.9 on Windows Vista. The scripts load in parallel, order is preserved, scripts on 2 domains. The busy indicator at the mouse pointer in FF *does not* show until all scripts are loaded. In IE7, the busy indicator does not appear.

Imo, not a big issue.

Just wondering, would adding a callback for each script be difficult?

Would make your script awesome.

- Aaron

Thanks, Aaron.

Callbacks are a great idea, but I decided not to use that approach here. Consider the case of JS libraries that you might want to include - say jquery. To add a callback to the jquery library is not a good idea since you are modifying the library itself. This doesn't let you load from the Google Ajax APIs for example. Also, upgrading the library becomes hard to do. Hence I dumped the callback approach.

Let me know what you think. If you think otherwise, I might consider adding a callback externally to this script.
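One way a callback could be added externally, without modifying the library file itself, is to listen for the script element's own load notification. The following is only a minimal sketch and not the script from this post: `loadScript` and its `doc` parameter are hypothetical names, and it assumes that most browsers fire `onload` on script elements while older versions of IE report completion through `onreadystatechange`.

```javascript
// Hypothetical helper: loads one script and calls `callback(url)` once it has loaded.
// `doc` is normally `document`; it is a parameter so the function is easy to test.
function loadScript(doc, url, callback) {
  var t = doc.createElement("script");
  var done = false;

  function finish() {
    if (done) { return; }            // guard in case both events fire
    done = true;
    t.onload = t.onreadystatechange = null;
    callback(url);
  }

  // Assumption: most browsers fire onload for script elements.
  t.onload = finish;

  // Assumption: older IE signals completion through readyState instead.
  t.onreadystatechange = function () {
    var state = t.readyState;
    if (state === "loaded" || state === "complete") { finish(); }
  };

  t.setAttribute("type", "text/javascript");
  t.setAttribute("src", url);
  doc.getElementsByTagName("head")[0].appendChild(t);
  return t;
}
```

Note that scripts injected this way are not guaranteed to execute in the order they were requested, so this kind of callback suits independent scripts better than interdependent ones.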

Please note that the new Firefox 3.1 will also support defer! You should extend your script to support Firefox 3.1. Good article, thanks!

Dude this is awesome!! Thanks for sharing.

I have a suggestion: won't it be better to have the assignment and the conditional statement above the for loop? You end up repeating the code, but you need not do the assignment or the conditional check on each iteration.

Example:

    var sc = "script", tp = "text/javascript";
    if(window.navigator.userAgent.indexOf("MSIE")!==-1 || window.navigator.userAgent.indexOf("WebKit")!==-1) {
      for(var i = 0, l = s.length; i < l; i++){
        document.writeln("<" + sc + " type=\"" + tp + "\" src=\"" + s[i] + "\" defer></" + sc + ">");
      }
    }

same on the else as well.

Hey Prashanth,

Not sure if your suggestion makes any measurable difference. However that could be argued, so I decided to modify my script to incorporate your change.

Cheers!

Hi Rakesh,

Why are you sniffing for browsers?

Does Firefox not support document.write()?

Cheers,

Yesudeep.

It's not that Firefox doesn't support it. It's that it doesn't cause the downloads to happen in parallel.

Yesudeep made a couple of points on his blog post about this code, almost all of which I disagree with. However, he made two excellent points about the loop: (1) that the scope chain lookup has to go all the way up to the global scope, and (2) that there are loop-invariant computations being made inside the loop.

I have made code changes to address point 1 above, by caching global scope variables in the local scope to reduce the scope chain lookup, making the loop theoretically faster. I am currently not fixing 2 since it not only increases code size, it also reduces readability.

If you are looping too much, it means you have a lot of JS files to download, which is itself something you should rethink.

Hi Rakesh,

I'm pretty sure you have your reasons to disagree, and that's appreciated.

Certainly, you wouldn't want to include a ton of scripts into your code. Minimizing the number of HTTP requests made by combining scripts is surely a production tip. However, when you are testing code on a development server, it really doesn't make sense to have all of them combined as debugging them then becomes painful (ref. error foobar on line 9456 in entire.library.js or line 1 in entire.library.min.js).

Anyway, including a lot of scripts wasn't my point in the blog post if you noticed.

Factoring out loop invariants and caching access to global variables does speed up code and to my mind, there's no reason to not do this. As for the number of bytes consumed over-the-wire, do you really think t[sa] makes a difference for example? If you're using gzip compression to serve your script files, and I'm sure you would, t.setAttribute, t.setAttribute, t.setAttribute, ... becomes a non-issue. There don't appear to be any benefits doing that, for example.

For argument's sake, if you're using UNIX or Linux, you can try sticking this list of words into a file:

Apple

Google

Microsoft

Orange

Mango

Tomato

Mongo

China

Charlie

Delta

Gamma

Beta

and run `gzip filename.txt` on that.

Now try that on this input:

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

Apple

I'm pretty sure you'll see a large difference.
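The experiment described above can also be tried without the command line. This is a small sketch that assumes Node.js and its built-in `zlib` module; the `gzippedSize` helper is a hypothetical name, and the two inputs are the word lists given above.

```javascript
// Compare gzipped sizes of varied vs. repeated text using Node's built-in zlib.
var zlib = require("zlib");

// Hypothetical helper: returns the size in bytes of the gzipped string.
function gzippedSize(text) {
  return zlib.gzipSync(Buffer.from(text, "utf8")).length;
}

// The first list: twelve different words, one per line.
var varied = ["Apple", "Google", "Microsoft", "Orange", "Mango", "Tomato",
  "Mongo", "China", "Charlie", "Delta", "Gamma", "Beta"].join("\n") + "\n";

// The second list: the same word on each of 22 lines.
var repeated = new Array(22 + 1).join("Apple\n");

console.log("varied:   " + varied.length + " bytes raw, " +
  gzippedSize(varied) + " bytes gzipped");
console.log("repeated: " + repeated.length + " bytes raw, " +
  gzippedSize(repeated) + " bytes gzipped");
```

The repeated input is larger before compression, yet it should come out smaller after gzip, because the DEFLATE format used by gzip replaces repeated strings with short back-references.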

var sa = 'setAttribute';

t[sa](foo, bar); is surely harder to read than t.setAttribute(foo, bar);. Don't you agree? :-)

Additionally, look at this line:

doc.getElementsByTagName("head")[0].appendChild(t);

This is inside a loop. doc.getElementsByTagName("head")[0], again is a loop invariant (it fetches the same DOM element per iteration and DOM is pretty damn slow!), and can be pretty easily factored out of it.
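Factoring that invariant out of the loop might look like the sketch below. It only illustrates the suggestion and is not the code from either blog post: `injectScripts` and its `doc` parameter are hypothetical names, and only the script-element branch is covered.

```javascript
// Hypothetical variant of the DOM-injection branch with the loop invariant hoisted.
// `doc` is normally `document`; it is a parameter here so the sketch can be tested.
function injectScripts(doc, urls) {
  // Looked up once, instead of on every iteration.
  var head = doc.getElementsByTagName("head")[0];

  for (var i = 0, l = urls.length; i < l; ++i) {
    var t = doc.createElement("script");
    t.setAttribute("type", "text/javascript");
    t.setAttribute("src", urls[i]);
    head.appendChild(t);
  }
  return urls.length; // number of script elements appended
}
```

In a page this would be called as `injectScripts(document, s)` with the array of script paths.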

Also, in which version of Firefox or Opera do you see that scripts aren't downloaded in parallel when we use document.write()? As I said, I do not have access to Firefox 2.0/Opera 9.x or earlier and IE. With the latest release of both browsers, document.write() does cause the browser to fetch scripts in parallel.

I'd appreciate any help with this and would be more than happy to document them and incorporate them into my version as well. Thanks for taking the time for answering. :-)

Cheers,

Yesudeep.

Yesudeep,

You are talking about the benefits of readability vs. compression, and in itself it's a worthy topic of discussion. I chose to compromise on readability a little so that it can compress better. Since sa is now just a variable, and not a public member of an element, t[sa] compresses better with say shrinksafe since it doesn't have to preserve global symbols with respect to method references.

This script is about on-the-wire performance optimization, and I didn't want a bootstrap itself to add too much baggage. Gzip is a layer that is added later, and will give its benefits in either case. Depending on the context, I generally disagree with relying on gzip as a crutch for handling code bloat.

[digression]

IIRC, Gzip's Huffman algorithm is based on the frequency of repetition of characters in the English alphabet, and not on the frequency or number of characters in the file being compressed. This is why binary files don't compress well with Gzip. Gzip is a one pass algorithm. If it had to take into account multiple occurrences within the same file, it would at least be a two pass algorithm. So, repetition of text in the file in itself doesn't help Gzip one bit. Correct me if I'm mistaken about this.

[/digression]

You are right again about the getElementsByTagName() call being a loop invariant. However, you are probably being too critical about the performance implications. Firstly, getElementsByTagName is not an expensive call at all - it's implemented natively and doesn't cause any browser redraw behavior. The appendChild does do a DOM manipulation, but it can't be avoided. (Well, it can be batched up for performance, but that increases code size as well. It's a fine line.)

Besides, and like I said before, if you are doing more than a very few script includes, you are doing it wrong. I would say that even iterating 5 times is excessive. The benefits of optimizing a loop which will run so few times and does so little within it is of very diminishing value. I'd err on the side of compact concise code.

About the use of document.write vs. script tag injection, I wonder if you got the chance to look at Steve Souders' slides that I have linked above. Check out the discussion on (and leading up to) slide 25. You should get a sense of the reason I made the choice to browser sniff. Look especially at the parallel download capabilities and the ability to preserve script execution order.

Hope this helps.

About gzip encoding:

-rw-r--r-- 1 yesudeep yesudeep 652 2009-07-29 16:51 rakesh.js

-rw-r--r-- 1 yesudeep yesudeep 513 2009-07-29 16:58 rakesh-min.js

-rw-r--r-- 1 yesudeep yesudeep 338 2009-07-29 16:56 rakesh-min.js.gz

-rw-r--r-- 1 yesudeep yesudeep 659 2009-07-29 16:54 yesudeep.js

-rw-r--r-- 1 yesudeep yesudeep 513 2009-07-29 16:58 yesudeep-min.js

-rw-r--r-- 1 yesudeep yesudeep 334 2009-07-29 16:56 yesudeep-min.js.gz

The only difference is I've removed t[sa] references, removed the definition for sa, and used .setAttribute directly instead. :-)

The same size when only minified using the YUI Compressor.

An impressive 4 bytes shorter when gzipped! Ok, kidding. Not so impressive, but in a large script this can make a huge difference.

    var s = [
      "/javascripts/script1.js",
      "/javascripts/script2.js",
      "/javascripts/script3.js",
      "/javascripts/script4.js",
      "/javascripts/script5.js"
    ];
    var sc = "script",
        tp = "text/javascript",
        doc = document, ua = window.navigator.userAgent;
    for(var i=0, l=s.length; i<l; ++i) {
      if(ua.indexOf("MSIE")!==-1 || ua.indexOf("WebKit")!==-1) {
        doc.writeln("<" + sc + " type=\"" + tp + "\" src=\"" + s[i] + "\" defer></" + sc + ">");
      } else {
        var t=doc.createElement(sc);
        t.setAttribute("src", s[i]);
        t.setAttribute("type", tp);
        doc.getElementsByTagName("head")[0].appendChild(t);
      }
    }

This explains GZip encoding:

http://code.google.com/speed/articles/gzip.html

And I can pretty much see the same example in there that I wrote about earlier.

I agree, we have different design goals.

While you're trying to minimize the space used, I'm more focused on the speed of execution while not losing out too much on space.

Yesudeep, that is not my design goal - just a consideration. The design goal is to make script downloads in parallel across domains and preserve script execution order. The script does that well across all browsers I've tested. It doesn't give undefined symbol references at all. Not meaning to flame at all, but your script doesn't achieve all of this.

In particular, your script doesn't preserve script execution order, giving rise to the possibility of undefined symbol errors if your scripts are interdependent.

BTW, the 4k from your results is not minor savings at all. It all adds up VERY quickly, especially at the scale at which these kind of optimizations are required in the first place.

Since you still mention speed of execution, I would love to see some benchmark pointing out the difference in a realistic case of say max 10 files.

That's simply great!

We're finding that on FF, the append to head mechanism fails if the server drops the javascript request. In that case, the javascript will hang the browser. You can test this by doing a parallel download of a javascript file and then set iptables on the server to drop requests from your browser on both the input and output chains.

A long... and interesting discussion. I just have an idea to add...

If we divide the design into two components, a loader and an initializer, we can not only load all the required scripts in parallel but also fire a custom event that can trigger a callback or load another script.

That way, if for example I need to initialize 2-3 image galleries on a screen depending on some condition, I can load the script that creates the galleries and, after it has loaded, fire the initializer for the galleries.
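A rough sketch of that loader/initializer split follows. The names `loadAll` and `onAllLoaded` are hypothetical, as is the `doc` parameter, and the sketch assumes that script elements report completion through `onload` or, in older versions of IE, through `onreadystatechange`.

```javascript
// Hypothetical loader: starts every download, then calls `onAllLoaded` once
// each script element has reported that it finished loading.
// `doc` is normally `document`; it is a parameter so the sketch can be tested.
function loadAll(doc, urls, onAllLoaded) {
  var head = doc.getElementsByTagName("head")[0];
  var remaining = urls.length;

  if (remaining === 0) { onAllLoaded(); return; }

  // Attach completion handlers to one script element.
  function watch(t) {
    var done = false;
    function finish() {
      if (done) { return; }          // count each script only once
      done = true;
      t.onload = t.onreadystatechange = null;
      remaining -= 1;
      if (remaining === 0) { onAllLoaded(); }   // the "initializer" hook
    }
    t.onload = finish;
    t.onreadystatechange = function () {
      if (t.readyState === "loaded" || t.readyState === "complete") { finish(); }
    };
  }

  for (var i = 0; i < urls.length; ++i) {
    var t = doc.createElement("script");
    watch(t);
    t.setAttribute("type", "text/javascript");
    t.setAttribute("src", urls[i]);
    head.appendChild(t);
  }
}
```

For the gallery case, a call might look like `loadAll(document, ["/javascripts/gallery.js"], initGalleries)`, where both the path and `initGalleries` are made up for illustration. The sketch makes no promise about the order in which the scripts execute; it only signals when all of them are done.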

YUI has a loader incorporated in it that does fire a load completed event.

http://developer.yahoo.com/yui/yuiloader/

IE should support on ready state change event, FF supports onload for Scripts as well as LINK tags.

(just mentioning)

Another interesting scenario that follows directly from this: does it make sense to create a script (with minimal performance hit) that can manage all the JS and CSS files loaded in the browser at a time? Could such a library be designed to reload scripts on demand, or maybe also remove them?

Lab.js http://labjs.com/ is also an interesting example on these lines

Any comments/views on this thought process will be accepted.

PS. I think I need to mention here that I am not trying to criticize any approach in any way. I myself used the code (with some tinkering) that Rakesh showed here in a released project.

Hi Everyone,

Please how can I use this code on a WordPress site to make the scripts load in Parallel?

Thanks,

Richie.

WARNING: The FF and Opera behavior upon which the execution order preservation is built is BROKEN IN FF 4 and Opera 10+

You can accomplish parallel loading in Internet Explorer without the need for document.write using onload-chaining. Much like an Image() element, IE will fetch the URL assigned to the SRC attribute of a script even if it's not yet inserted into the DOM. Instead of inserting the elements immediately, add them to a queue. Then add an onreadystatechange handler that pulls the next script in the queue and loads it.

http://digital-fulcrum.com/webperf/orderedexec/

Vote for these two bugs to have the same behavior added to FF and WebKit

https://bugs.webkit.org/show_bug.cgi?id=51650

https://bugzilla.mozilla.org/show_bug.cgi?id=621553

Hello, great work, but the script doesn't work with Firefox. Can you update it please? And is it possible to add non-blocking JavaScript?

